Adopting artificial intelligence across industries means extracting knowledge from increasingly complex projects and ever-growing volumes of data.

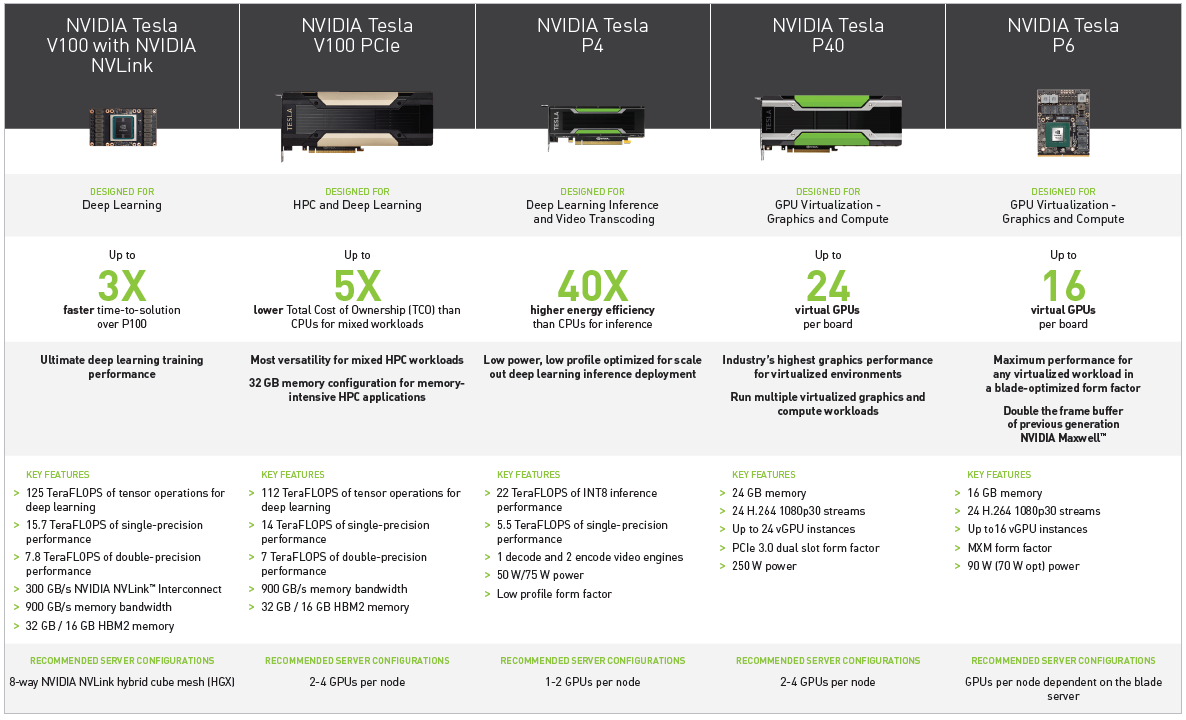

To accelerate this adoption, data centers need the latest, highest-performing hardware, such as the NVIDIA® Tesla® V100 GPU.

Artificial Intelligence and High Performance Computing

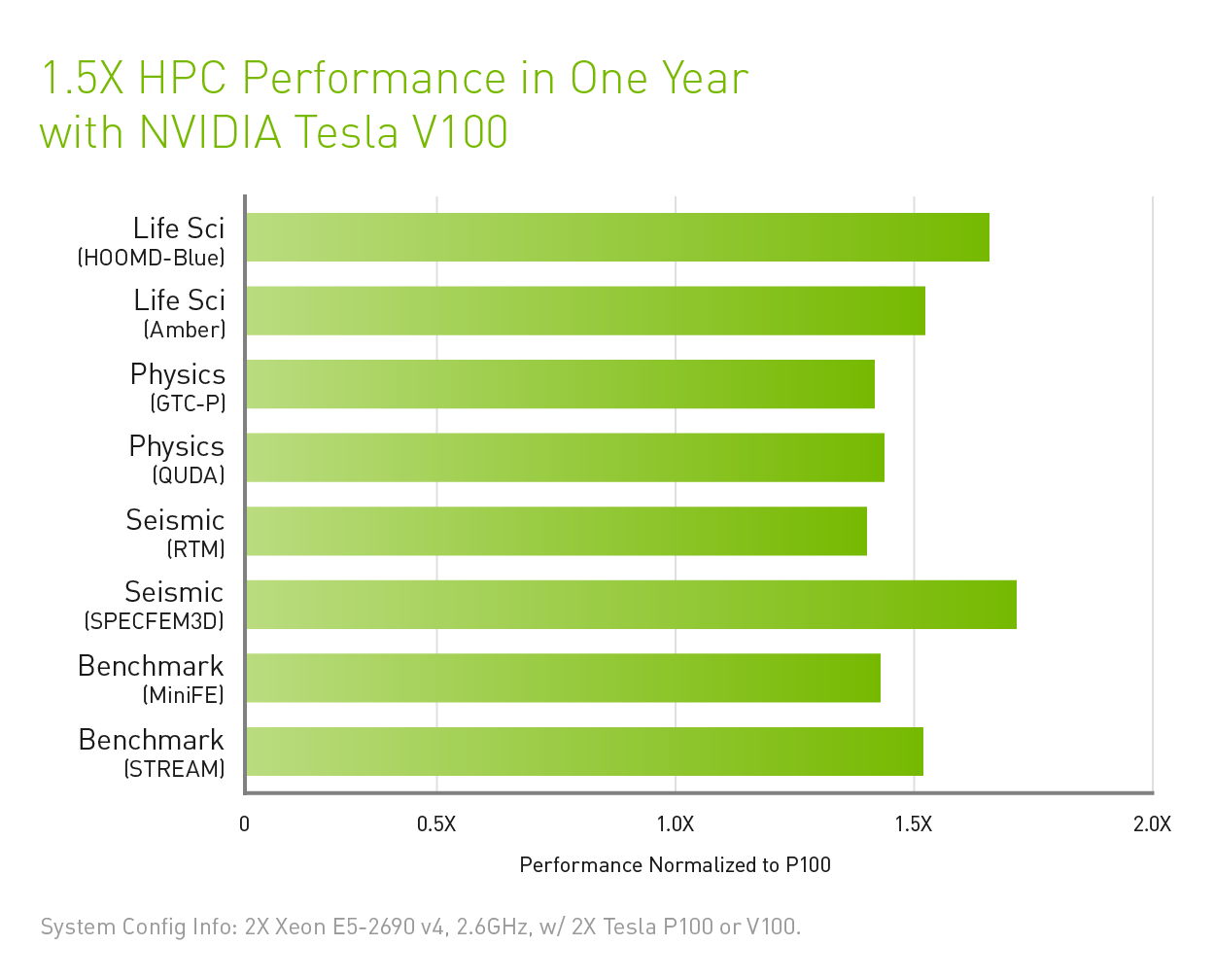

The NVIDIA® Tesla® V100 is a Tensor Core GPU built on the NVIDIA Volta architecture for AI and high-performance computing (HPC) applications. It is one of the most technically advanced data center GPUs in the world, delivering the performance of up to 100 CPUs in a single GPU, and it is available in 16GB and 32GB memory configurations. The Tesla V100 delivers more than 100 teraFLOPS (TFLOPS) of deep learning performance, and multiple V100 GPUs can be connected with NVIDIA NVLink™ at up to 300 GB/s to build high-performance computing servers and supercomputers. For example, in inference tasks a single server equipped with Tesla V100 GPUs delivers the same performance as 30 CPU servers consuming 13 kW of power. This leap in performance and energy efficiency is fueling the scaling up of AI services.

NVIDIA Tesla V100 PCIe form factor

The NVIDIA® Tesla® V100 is used for AI tasks such as speech recognition, building virtual assistants, and training complex neural networks quickly, as well as for applying artificial intelligence to high-performance computing workloads that analyze large amounts of data or run simulations.

Tesla V100 key features

NVIDIA® Tesla® V100 key features include:

- a redesigned streaming multiprocessor (SM) optimized for deep learning, which is up to 50% more energy efficient than the previous Pascal design thanks to architectural changes. These changes raise FP32 and FP64 performance at the same power consumption. In addition, the new Tensor Cores, designed specifically for training and inference of deep learning neural networks, give the Tesla® V100 up to 12 times higher peak performance in neural network training and mixed-precision calculations. Independent, parallel integer and floating-point datapaths improve Volta's efficiency on mixed workloads. A new combined L1 data cache and shared memory subsystem significantly improves performance on some tasks while also simplifying their programming;

- support for second-generation NVLink high-speed interconnect technology, which provides higher bandwidth, more links, and better scalability for systems with multiple GPUs and CPUs. The GV100 processor supports up to six NVLink links, each carrying 25 GB/s in each direction, for a total bidirectional bandwidth of 300 GB/s. The second version of NVLink also supports new features of servers based on IBM POWER9 processors, including cache coherence. The new version of the NVIDIA DGX-1 supercomputer, based on the Tesla V100, uses NVLink to provide better scalability and ultra-fast neural network training for deep learning;

- high-performance, efficient 16GB HBM2 memory with a peak bandwidth of up to 900 GB/s. Combining Samsung's latest-generation HBM2 memory with an improved memory controller in GV100 delivers a 1.5x increase in delivered memory bandwidth over the previous Pascal-based GP100 chip, with memory bandwidth utilization reaching up to 95% in many real workloads;

- the Multi-Process Service (MPS) feature, which lets multiple processes share the same GPU. Volta hardware accelerates critical components of the CUDA MPS server, improving performance, isolation, and quality of service (QoS) for multiple compute applications sharing a single GPU. Volta also triples the maximum number of MPS clients, from 16 on Pascal to 48 on Volta;

- improved Unified Memory and address translation. In GV100, Unified Memory uses new access counters to migrate memory pages to the processor that accesses them most frequently, which makes access to memory ranges shared between processors more efficient. On IBM POWER platforms, new Address Translation Services (ATS) allow the GPU to access the CPU's page tables directly;

- Cooperative Groups and new cooperative launch APIs. Cooperative Groups is a new programming model introduced in CUDA 9 for organizing groups of communicating threads. It lets developers specify the granularity at which threads communicate, helping them organize more efficient parallel computations. Basic Cooperative Groups functionality is supported on all NVIDIA GPUs since Kepler, and Volta adds support for new synchronization patterns;

- maximum performance and maximum efficiency modes, which let the GPU be used more efficiently in different scenarios. In maximum performance mode, the Tesla V100 accelerator runs without a frequency limit up to its 300 W TDP. This mode suits applications that require the highest computing speed and maximum throughput. Maximum efficiency mode lets you cap the power consumption of Tesla V100 accelerators to get the best performance per watt. You can also set a power cap for all GPUs in a server rack, reducing power consumption while maintaining sufficient performance;

- optimized software. New versions of deep learning frameworks such as Caffe2, MXNet, CNTK, TensorFlow, and others can use Volta's features to significantly increase training performance and reduce neural network training time. Volta-optimized cuDNN, cuBLAS, and TensorRT libraries leverage the new capabilities of the Volta architecture to improve the performance of deep learning and traditional HPC applications. CUDA Toolkit 9.0 already includes new and optimized APIs that support Volta features.
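The bandwidth figures in the list above can be checked with simple arithmetic. The Python sketch below assumes that the 25 GB/s per NVLink link applies in each direction. It also assumes an HBM2 memory clock of about 877 MHz at double data rate (roughly 1.754 Gb/s per pin) on the 4096-bit HBM2 bus; this clock value is not stated in this article. The helper names are hypothetical and are not part of any NVIDIA API.

```python
# Sketch: back-of-the-envelope bandwidth arithmetic for Tesla V100.
# Helper names are hypothetical, not part of any NVIDIA API.

NVLINK_LINKS = 6                 # NVLink links per GV100 (from the text)
NVLINK_GBPS_PER_DIRECTION = 25   # GB/s per link, per direction (assumed reading)

HBM2_BUS_BITS = 4096             # 8 memory controllers x 512 bits
HBM2_PIN_RATE_GBITS = 0.877 * 2  # assumption: 877 MHz clock, double data rate
UTILIZATION = 0.95               # utilization figure quoted in the text


def nvlink_total_gbps(links: int, per_direction_gbps: float) -> float:
    """Aggregate NVLink bandwidth in GB/s, counting both directions of every link."""
    return links * per_direction_gbps * 2


def hbm2_peak_gbps(bus_bits: int, pin_rate_gbits: float) -> float:
    """Peak DRAM bandwidth in GB/s: bus width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_bits * pin_rate_gbits / 8


nvlink = nvlink_total_gbps(NVLINK_LINKS, NVLINK_GBPS_PER_DIRECTION)
peak = hbm2_peak_gbps(HBM2_BUS_BITS, HBM2_PIN_RATE_GBITS)
effective = peak * UTILIZATION

print(f"NVLink aggregate bandwidth: {nvlink:.0f} GB/s")
print(f"HBM2 peak bandwidth: {peak:.0f} GB/s")
print(f"HBM2 at {UTILIZATION:.0%} utilization: {effective:.0f} GB/s")
```

Under these assumptions, the script reports 300 GB/s for NVLink and about 898 GB/s peak for HBM2, close to the 900 GB/s quoted above.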

Together, these Volta features can improve neural network performance several times over, reducing the time needed to prepare artificial intelligence algorithms for production.

Tesla V100 architecture

NVIDIA engineers made a number of changes to the Tesla® V100 hardware architecture that affected the GV100 chip and its streaming multiprocessors. The GV100 consists of multiple Graphics Processing Clusters (GPCs), Texture Processing Clusters (TPCs), and memory controllers. Each GPC contains several TPCs, and each TPC in turn contains multiple Streaming Multiprocessors (SMs).

NVIDIA Tesla V100 SXM2 form factor

The full Volta GV100 compute processor contains six GPCs and 42 TPCs, each TPC including two SMs. This gives a total of 84 SMs per chip, each containing 64 FP32 cores, 64 INT32 cores, 32 FP64 cores, and 8 new Tensor Cores specialized for neural network acceleration. Each SM also contains four texture units.

With 84 SMs, the full GV100 has a total of 5,376 FP32 cores, 5,376 INT32 cores, 2,688 FP64 cores, 672 Tensor Cores, and 336 texture units. To access local memory, the GPU has eight 512-bit HBM2 memory controllers that together form a 4096-bit memory bus. Each HBM2 memory stack is controlled by its own pair of memory controllers, and each memory controller is connected to a 768KB L2 cache partition, giving the GV100 a total of 6MB of L2 cache. The Tesla V100 accelerator itself has 80 of the 84 SMs enabled, for 5,120 FP32 cores and 640 Tensor Cores.
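The totals above follow directly from the per-SM configuration. The Python sketch below multiplies the per-SM resources by the number of SMs and then estimates peak throughput. Several inputs are assumptions not stated in this article: a 1.53 GHz boost clock (the SXM2 variant), one fused multiply-add (FMA) counting as two floating-point operations, and each Tensor Core performing 64 FMAs per clock. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SMConfig:
    """Per-SM execution resources of the GV100 streaming multiprocessor (from the text)."""
    fp32: int = 64
    int32: int = 64
    fp64: int = 32
    tensor: int = 8
    texture: int = 4


def chip_totals(num_sms: int, sm: SMConfig = SMConfig()) -> dict:
    """Scale per-SM unit counts by the number of enabled SMs."""
    return {
        "fp32": num_sms * sm.fp32,
        "int32": num_sms * sm.int32,
        "fp64": num_sms * sm.fp64,
        "tensor": num_sms * sm.tensor,
        "texture": num_sms * sm.texture,
    }


def peak_tflops(units: int, flops_per_clock: int, clock_ghz: float) -> float:
    """Peak rate in TFLOPS: units x FLOPs per unit per clock x clock (GHz) / 1000."""
    return units * flops_per_clock * clock_ghz / 1000


# Full GV100: 6 GPCs x 7 TPCs per GPC (42 / 6) x 2 SMs per TPC.
full_sms = 6 * 7 * 2
full = chip_totals(full_sms)

# Memory subsystem: eight 512-bit controllers, each with a 768 KB L2 partition.
bus_bits = 8 * 512
l2_kb = 8 * 768

# Tesla V100 ships with 80 of the 84 SMs enabled.
v100 = chip_totals(80)
BOOST_GHZ = 1.53  # assumption: SXM2 boost clock

fp32_tflops = peak_tflops(v100["fp32"], 2, BOOST_GHZ)        # 1 FMA = 2 FLOPs
fp64_tflops = peak_tflops(v100["fp64"], 2, BOOST_GHZ)
tensor_tflops = peak_tflops(v100["tensor"], 128, BOOST_GHZ)  # 64 FMAs per clock

print(full, bus_bits, l2_kb)
print(f"FP32 {fp32_tflops:.1f}, FP64 {fp64_tflops:.1f}, Tensor {tensor_tflops:.0f} TFLOPS")
```

With these assumptions, the estimates come out at about 15.7 TFLOPS (FP32), 7.8 TFLOPS (FP64), and 125 TFLOPS (Tensor Cores).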

Notably, the new GV100-based products are technically compatible with the previous-generation ones. This was done to speed up production and adoption of the new products, which can be used with the same motherboards, power systems, and other components.

Computing capabilities

The Tesla® V100's computing capabilities are unlocked by a new version of NVIDIA's GPU computing platform, CUDA 9. This release fully supports the Volta architecture and the Tesla V100 accelerator. It also provides initial support for the specialized Tensor Cores, which greatly speed up the mixed-precision matrix operations widely used in deep learning.
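The mixed-precision operation that Tensor Cores accelerate can be illustrated numerically. As described in NVIDIA's Volta documentation rather than in this article, each Tensor Core computes D = A × B + C on 4×4 matrices, with A and B in FP16 and the products accumulated in FP32. The Python sketch below emulates this using the standard library's half-precision `struct` format. The function names are hypothetical. The summation order is also an assumption, because the hardware's internal order is not described here.

```python
import struct
from typing import List

Matrix = List[List[float]]


def to_fp16(x: float) -> float:
    """Round to IEEE 754 half precision (round-to-nearest-even)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round to IEEE 754 single precision."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def tensor_core_mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Emulate D = A x B + C on 4x4 matrices: FP16 inputs, FP32 accumulation."""
    n = 4
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(c[i][j])
            for k in range(n):
                # The product of two FP16 values is exactly representable in FP32.
                acc = to_fp32(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            d[i][j] = acc
    return d


ones = [[1.0] * 4 for _ in range(4)]
big = [[2048.0] * 4 for _ in range(4)]
d = tensor_core_mma(ones, ones, big)
print(d[0][0])                 # FP32 accumulation keeps all four +1 terms: 2052.0
print(to_fp16(2048.0 + 1.0))   # in FP16 alone, 2049 rounds back to 2048.0
```

The example shows why the FP32 accumulator matters. Near 2048, FP16 values are spaced 2 apart, so adding 1 repeatedly in FP16 would lose every increment, while FP32 accumulation keeps them.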

The GV100 processor also introduces a new compute capability level, Compute Capability 7.0. In addition, CUDA 9 includes accelerated libraries for linear algebra, image processing, FFT, and more, along with improvements to the programming model, Unified Memory support, the compiler, and developer tools.
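Software that relies on Volta features typically checks the device's compute capability first. The Python sketch below shows that logic. The capability is passed in as a (major, minor) tuple; on a real system, it could come from `cudaGetDeviceProperties` in the CUDA runtime or `torch.cuda.get_device_capability()` in PyTorch. The helper names are hypothetical. The sketch relies on two facts not stated in this article: Tensor Cores first appear at compute capability 7.0, and `nvcc` targets that level with `-arch=sm_70`.

```python
from typing import Tuple

ComputeCapability = Tuple[int, int]

# Partial, illustrative mapping covering only the two generations discussed here.
ARCHITECTURE = {
    (6, 0): "Pascal (GP100)",
    (7, 0): "Volta (GV100)",
}


def has_tensor_cores(cc: ComputeCapability) -> bool:
    """Tensor Cores were introduced with compute capability 7.0 (Volta)."""
    return cc >= (7, 0)


def nvcc_arch_flag(cc: ComputeCapability) -> str:
    """Build the nvcc flag that targets a given compute capability, e.g. -arch=sm_70."""
    major, minor = cc
    return f"-arch=sm_{major}{minor}"


for cc in [(6, 0), (7, 0)]:
    name = ARCHITECTURE.get(cc, "unknown")
    print(f"{name}: tensor cores={has_tensor_cores(cc)}, flag={nvcc_arch_flag(cc)}")
```

Comparing tuples keeps the check correct for later capability levels as well, since any (major, minor) pair at or above (7, 0) passes.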

Tesla® V100 Specifications

The table compares the Tesla® V100 specifications. The maximum power consumption of the Tesla V100 in NVLink servers is 300 W; for the Tesla V100 and Tesla V100S in PCIe servers, it is 250 W.

Summary

Combining HPC and AI, the NVIDIA Tesla V100 accelerator excels both at running simulations and at processing data to extract useful insights from it. This makes it a new driving force behind artificial intelligence.

Our specialists are ready to help you purchase a server and choose the right configuration for any task.