- Performance: from 2nd generation Intel Xeon to AMD EPYC Bergamo

- Python Linux 4.4.2 kernel compile benchmark

- The c-ray benchmark

- SPEC CPU2017

- STH Nginx CDN performance

- Pricing analysis in MariaDB

- Virtualization in STH STFB KVM environment

- Power consumption: Intel Xeon (2nd generation) vs AMD EPYC Bergamo servers

- AMD EPYC Bergamo series: key results for the beginning of 2024

- Conclusion

In 2023, artificial intelligence became the most pressing topic for data centers. However, running large numbers of traditional applications on expensive AI servers makes little sense. Most such applications currently run on Intel Xeon Scalable “Cascade Lake” (2nd generation) or other server platforms, starting with the AMD EPYC 7002 “Rome” generation that came into wide use in 2019. The latest AMD EPYC processors are designed to replace these older servers. This article discusses what the energy-efficient AMD EPYC “Bergamo” cloud processors offer for server consolidation. We look at Supermicro servers, and at much higher consolidation ratios, since the AMD EPYC Bergamo chips were developed as specialized cloud processors, the opposite end of the spectrum from AI servers.

The 2nd generation Xeon “Cascade Lake” platform serves as the point of comparison in this article. We chose it because in mid-2021 it was still the most popular mainstream choice (the 3rd generation Xeon “Ice Lake” did not become popular immediately). We do not consider older AMD EPYC systems here, since sales of the EPYC 7001 series were limited. The 2nd generation EPYC 7002 series was steadily gaining popularity; however, more than 90% of users decide to upgrade 1st or 2nd generation Xeon Scalable servers only after 3-6 years of active use.

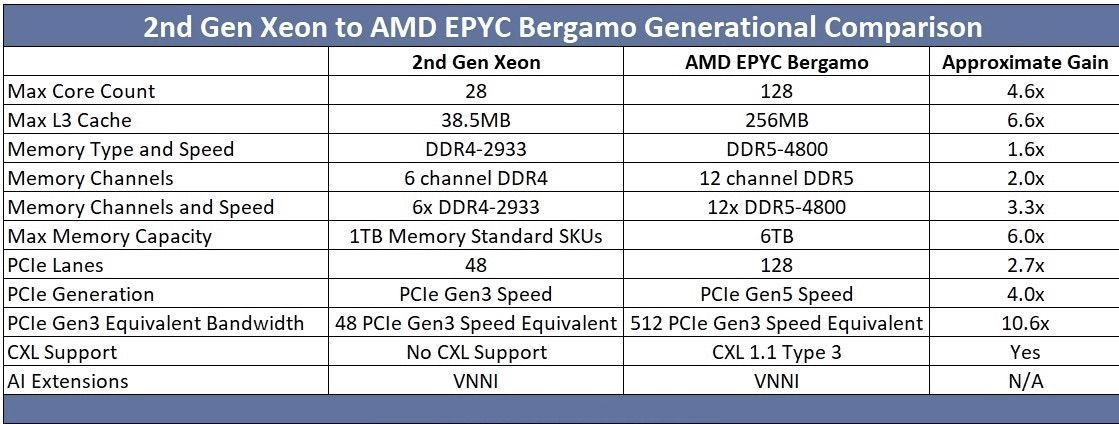

Below is a comparison table of the main characteristics of the top 2nd generation Intel Xeon and AMD EPYC Bergamo processors.

If you are still using hardware released before 2021, note that these Intel processors already include VNNI, an instruction set extension for AI inference. The comparison is otherwise close to like-for-like, since neither processor has accelerator blocks such as QuickAssist or AMX. The one notable difference is that the Zen 4c core is somewhat faster.

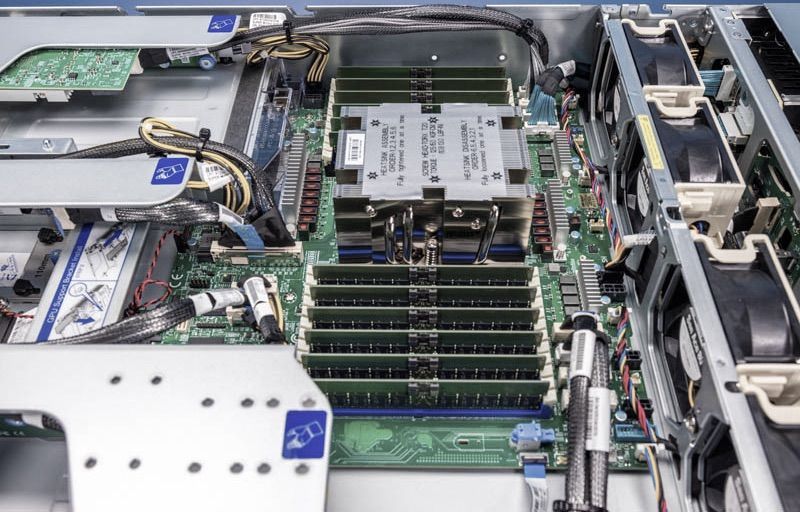

So, here we have a Supermicro AS-2015HS-TNR single-socket server. In terms of performance, one AMD EPYC 9754 processor is equivalent to 4-4.5 top-end 2021-era processors for most applications. This means that two top-class dual-socket servers can be replaced with one single-socket server, which brings the main advantages of single-socket systems: low power density and high operational efficiency.

Despite the consolidation, single-socket platforms still have significant I/O capacity. The two dual-socket servers mentioned above support a total of 192 PCIe Gen3 lanes. In a typical 2021 configuration, each processor had one 100 GbE network card in a PCIe Gen3 x16 slot (64 lanes in total across the four processors). The Bergamo chip supports a single 400 GbE NIC in a PCIe Gen5 x16 slot, so it can deliver the same throughput with a quarter of the lanes and a quarter of the network cards.
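The lane arithmetic above can be checked with a short sketch. The transfer rates (8 GT/s for Gen3, 32 GT/s for Gen5) and the 128b/130b encoding come from the PCIe specifications rather than from this article, and the function name is only illustrative. Protocol overhead is ignored, so treat the figures as upper bounds.

```python
# Per-lane transfer rates in GT/s from the PCIe specifications.
# Gen3 and Gen5 both use 128b/130b line encoding.
GT_PER_SEC = {3: 8.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in Gbit/s (no protocol overhead)."""
    return GT_PER_SEC[gen] * ENCODING_EFFICIENCY * lanes


old_lanes = 4 * 16  # four 100 GbE NICs, each in a Gen3 x16 slot
new_lanes = 1 * 16  # one 400 GbE NIC in a Gen5 x16 slot

print(f"Gen3 x16: {pcie_gbps(3, 16):.0f} Gbit/s behind each 100 GbE NIC")
print(f"Gen5 x16: {pcie_gbps(5, 16):.0f} Gbit/s behind one 400 GbE NIC")
print(f"Lanes used for 400 Gbit/s of networking: {old_lanes} vs {new_lanes}")
```

A Gen5 x16 slot has enough headroom for a 400 GbE port, which is why one card can stand in for four.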

The move to PCIe Gen5 is not the only change in the I/O part. More modern expansion cards (for example, OCP NIC 3.0) are now supported.

NVMe SSDs with PCIe Gen5 are gradually becoming widely available and gaining more support. Although they have been slow to reach the market, these drives already provide up to 4 times the bandwidth of the PCIe Gen3 drives used in previous-generation servers. SSD capacities have also grown significantly, so per-drive capacity can increase several times over in a single upgrade cycle.

There is also a reason why our analysis focuses on single-socket solutions rather than dual-socket Bergamo servers, even though the latter can almost double throughput. The AMD EPYC Bergamo processor supports 12 DDR5 memory channels. Each channel can hold two DIMMs, which makes it possible to connect 24 DDR5 DIMMs to each processor. For comparison, a dual-socket Xeon Scalable server of the 1st or 2nd generation can hold 12 DDR4 DIMMs per processor (24 memory modules in total). The main problem is the physical difficulty of placing 24 DIMMs per processor in a dual-socket Bergamo server.

A standard server may have 1 CPU socket with 24 DIMM slots or 2 CPU sockets with 12 DIMM slots each; either way, the total is 24 DIMM slots. However, two processors and 48 DIMMs cannot fit into a 19-inch chassis without a large motherboard custom-made for deep chassis. A CXL 1.1 Type-3 device can be used to attach additional memory to new servers, but this technology is still in testing.
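The DIMM counts above follow from simple multiplication. This sketch assumes two DIMMs per channel throughout. The figure of six memory channels per 1st/2nd generation Xeon Scalable socket is inferred from the 12 DIMMs per processor quoted above, and the helper name is hypothetical.

```python
def dimm_slots(sockets: int, channels_per_socket: int, dimms_per_channel: int = 2) -> int:
    """Total DIMM slots for a server with the given memory topology."""
    return sockets * channels_per_socket * dimms_per_channel


xeon_dual = dimm_slots(sockets=2, channels_per_socket=6)      # 6 channels is an assumption
bergamo_single = dimm_slots(sockets=1, channels_per_socket=12)
bergamo_dual = dimm_slots(sockets=2, channels_per_socket=12)

print(f"Dual-socket Xeon Scalable (1st/2nd gen): {xeon_dual} DIMM slots")
print(f"Single-socket EPYC Bergamo:              {bergamo_single} DIMM slots")
print(f"Dual-socket EPYC Bergamo:                {bergamo_dual} DIMM slots")
```

The single-socket Bergamo layout matches the slot count of the older dual-socket board, while a dual-socket Bergamo board would need twice as many slots in the same chassis width.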

Thus, the AMD EPYC Bergamo generation of processors can provide the following benefits:

- Faster PCIe Gen5 interface;

- 4.5 times more cores;

- Modern expansion card formats (for example, OCP NIC 3.0);

- When using single-socket servers, it is possible to fully utilize 1U or 2U rack space.

All of these benefits stem from the high level of performance that each AMD EPYC Bergamo socket delivers.

Performance: from 2nd generation Intel Xeon to AMD EPYC Bergamo

After careful analysis of Supermicro statistics (which may be skewed by a few large customers), we chose the 24-core Intel Xeon Gold 6252 processor for our review.

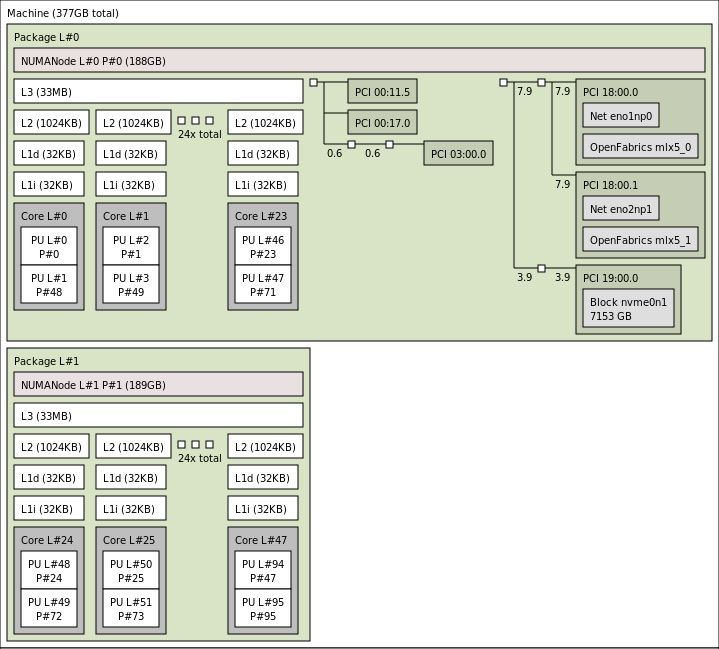

The topology of a dual-processor server based on these chips is shown below.

In the AMD EPYC Bergamo line, core counts range from 112 to 128. In our case, a 128-core chip with SMT is used.

The system topology is noticeably simpler, since all processor cores and PCIe devices connect to a single I/O die (IOD). In a dual-socket server with a single high-speed NIC, for example, data from cores on the remote socket has to cross the inter-socket link before reaching the network. In a single-socket system, every virtual machine, container, or application has direct access to the network card. The same applies to SSDs: dual-socket servers running the disk subsystem at 100 percent utilization can experience performance problems when memory and inter-socket bandwidth reach their limits.

Many enterprises allocate a fixed number of cores to virtual machines and do not use them to their full potential. Imagine a virtual machine with two or four virtual processors (vCPUs) allocated to an application that runs at only 5% of those cores' capacity for long periods. For a company that allocates a fixed number of cores, the consolidation ratio when moving from the 2021 Gold 6252 platform (24-core processors) to the 2023 EPYC 9754 platform (128-core processors) is 5.3:1. Per-core performance is also very important, since some applications depend on raw processor performance.
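On an existing dual-socket Linux server, one way to see which socket a NIC hangs off is the `numa_node` attribute that the kernel exposes in sysfs for PCI devices. The sketch below is an illustration only: the function name and the `eth0` interface name are placeholders, and the `sysfs_root` parameter exists purely so the function can be pointed at a test directory.

```python
from pathlib import Path
from typing import Optional


def nic_numa_node(iface: str, sysfs_root: str = "/sys") -> Optional[int]:
    """Return the NUMA node of the PCI device behind a network interface.

    Returns None when the attribute cannot be read (for example, virtual
    interfaces have no backing PCI device). The kernel reports -1 when it
    has no NUMA affinity information for the device.
    """
    path = Path(sysfs_root) / "class" / "net" / iface / "device" / "numa_node"
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    # "eth0" is a placeholder; substitute a real interface name.
    print(nic_numa_node("eth0"))
```

Workloads pinned to cores on a different node than the one reported here are the ones whose traffic crosses the inter-socket link.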
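The 5.3:1 figure is a plain core-count ratio. A minimal sketch, assuming that VMs are allocated a fixed number of cores and that per-core performance differences are ignored (the function name is illustrative):

```python
def core_consolidation_ratio(new_cores_per_socket: int, old_cores_per_socket: int) -> float:
    """Sockets of the old platform that one new socket replaces, by core count alone."""
    return new_cores_per_socket / old_cores_per_socket


ratio = core_consolidation_ratio(new_cores_per_socket=128, old_cores_per_socket=24)
print(f"EPYC 9754 vs Xeon Gold 6252: {ratio:.1f}:1 per socket")
```

Because this counts cores only, it says nothing about workloads that are limited by per-core speed, memory capacity, or I/O.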

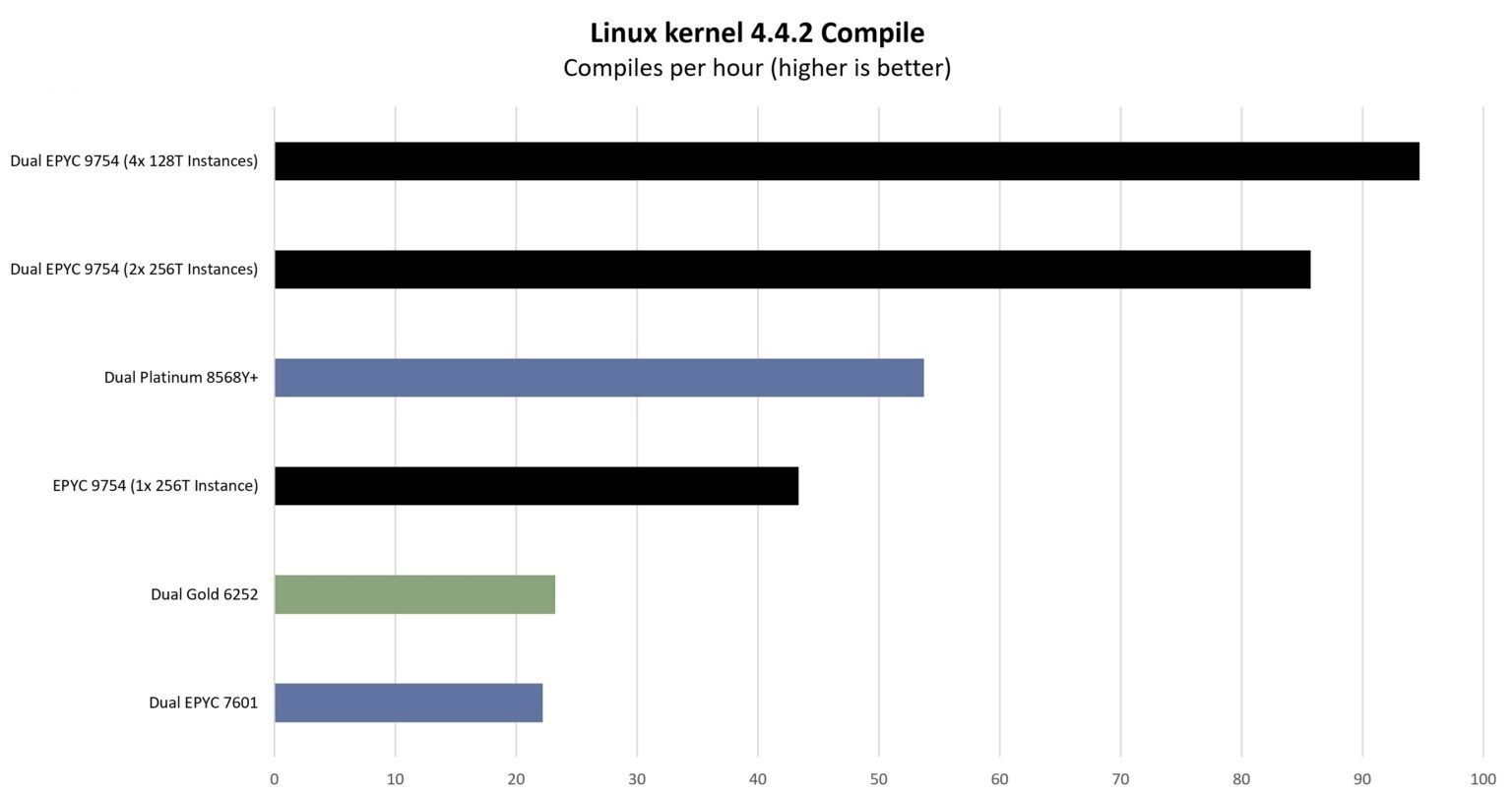

Python Linux 4.4.2 kernel compile benchmark

This has been one of the most popular benchmarks in recent years. The process is quite simple: take the standard configuration file for the Linux 4.4.2 kernel (from kernel.org) and run the standard auto-generated configuration build using every thread in the system. Results are expressed in compiles per hour.

Here the per-socket performance is not quite four times higher, but close to it. This illustrates a common problem with modern processors: when a single application runs across all processor cores, any phase that runs on one thread becomes a bottleneck. Applications that scale almost linearly to, say, 32 or 64 threads will not necessarily scale to 256 threads (or to 512 threads with two CPUs).
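The compiles-per-hour unit is just the inverse of the wall-clock time of one build. In this small sketch the timings are hypothetical placeholders, not results from this article:

```python
def compiles_per_hour(seconds_per_compile: float) -> float:
    """Convert the wall-clock time of one kernel build into compiles per hour."""
    return 3600.0 / seconds_per_compile


# Hypothetical build times in seconds, chosen only to illustrate the unit.
old_platform = compiles_per_hour(120.0)
new_platform = compiles_per_hour(30.0)

print(f"old: {old_platform:.0f}/h, new: {new_platform:.0f}/h, "
      f"speedup: {new_platform / old_platform:.1f}x")
```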
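The scaling limit described above is commonly modelled with Amdahl's law, which the article does not name explicitly. The sketch below assumes a hypothetical workload in which 99% of the work parallelizes perfectly. The 0.99 value is an illustration, not a measurement of the kernel compile.

```python
def amdahl_speedup(parallel_fraction: float, threads: int) -> float:
    """Ideal speedup over one thread when only part of the work is parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / threads)


PARALLEL_FRACTION = 0.99  # hypothetical: 1% of the work stays on one thread

for threads in (32, 64, 256, 512):
    speedup = amdahl_speedup(PARALLEL_FRACTION, threads)
    print(f"{threads:>3} threads: {speedup:5.1f}x speedup, "
          f"{speedup / threads:.0%} efficiency")
```

Even a 1% serial phase caps the speedup below 100x, so going from 256 to 512 threads adds far less than going from 32 to 64.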

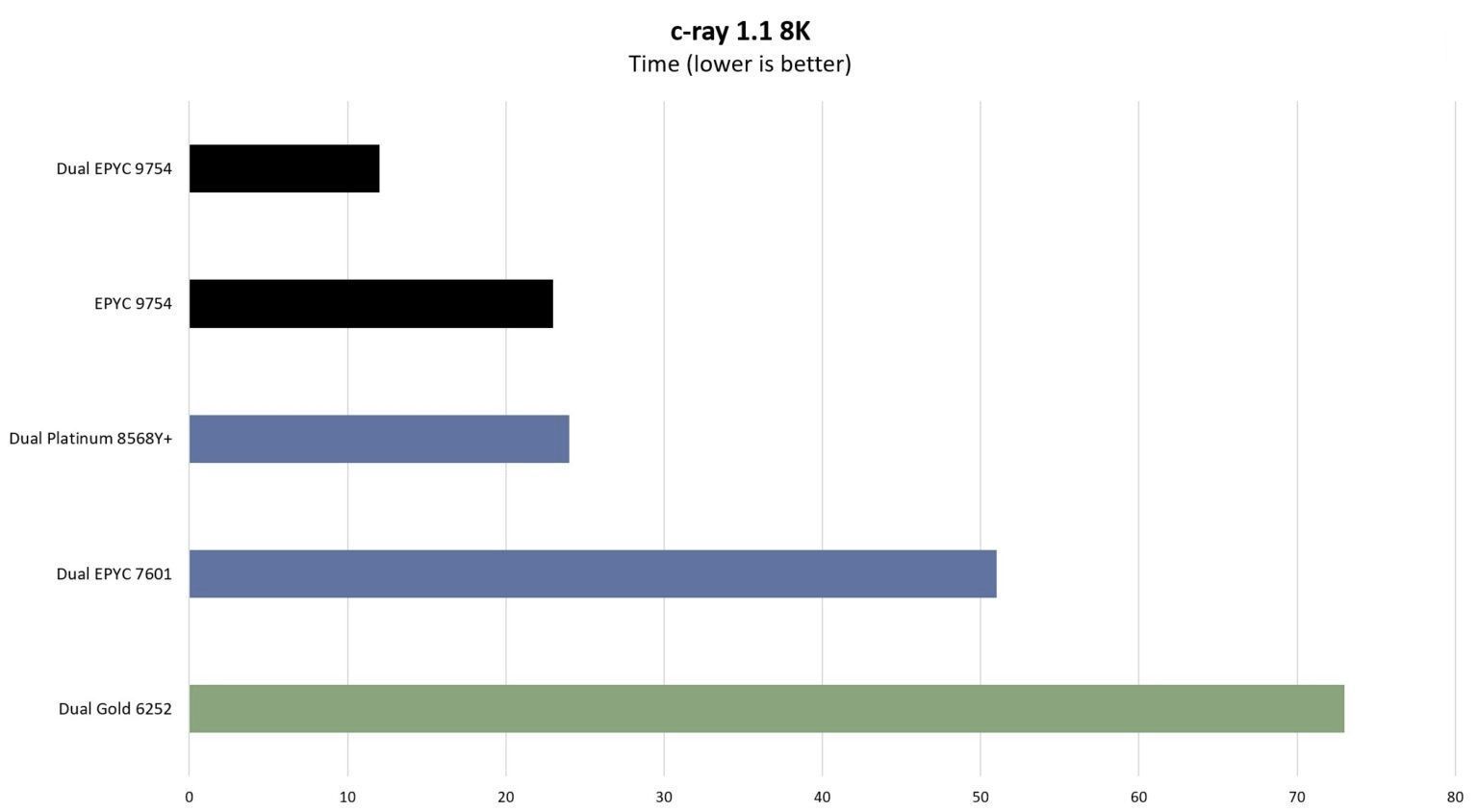

The c-ray benchmark

c-ray is a ray tracing benchmark. Ray tracing is a multi-threaded workload that is very often used to differentiate processors by performance. Below are the results obtained at 8K resolution.

Note that AMD's chip architecture tends to be more efficient in tests like this thanks to its cache: Zen 4c has less L3 cache than Zen 4, but still more than standard 2nd generation Intel Xeon chips. Keep in mind that L3 cache size should be judged relative to the number of cores.
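To compare cache relative to core count, divide the total L3 by the number of cores. The L3 sizes below are taken from the public spec sheets of the two processors compared in this article. They are an assumption added for illustration and are not quoted in the text.

```python
# (total L3 in MB, core count); spec-sheet values assumed for illustration.
CHIPS = {
    "AMD EPYC 9754 (Zen 4c)": (256.0, 128),
    "Intel Xeon Gold 6252": (35.75, 24),
}


def l3_per_core_mb(l3_mb: float, cores: int) -> float:
    """L3 cache available per core, in MB."""
    return l3_mb / cores


for name, (l3_mb, cores) in CHIPS.items():
    print(f"{name}: {l3_per_core_mb(l3_mb, cores):.2f} MB of L3 per core")
```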

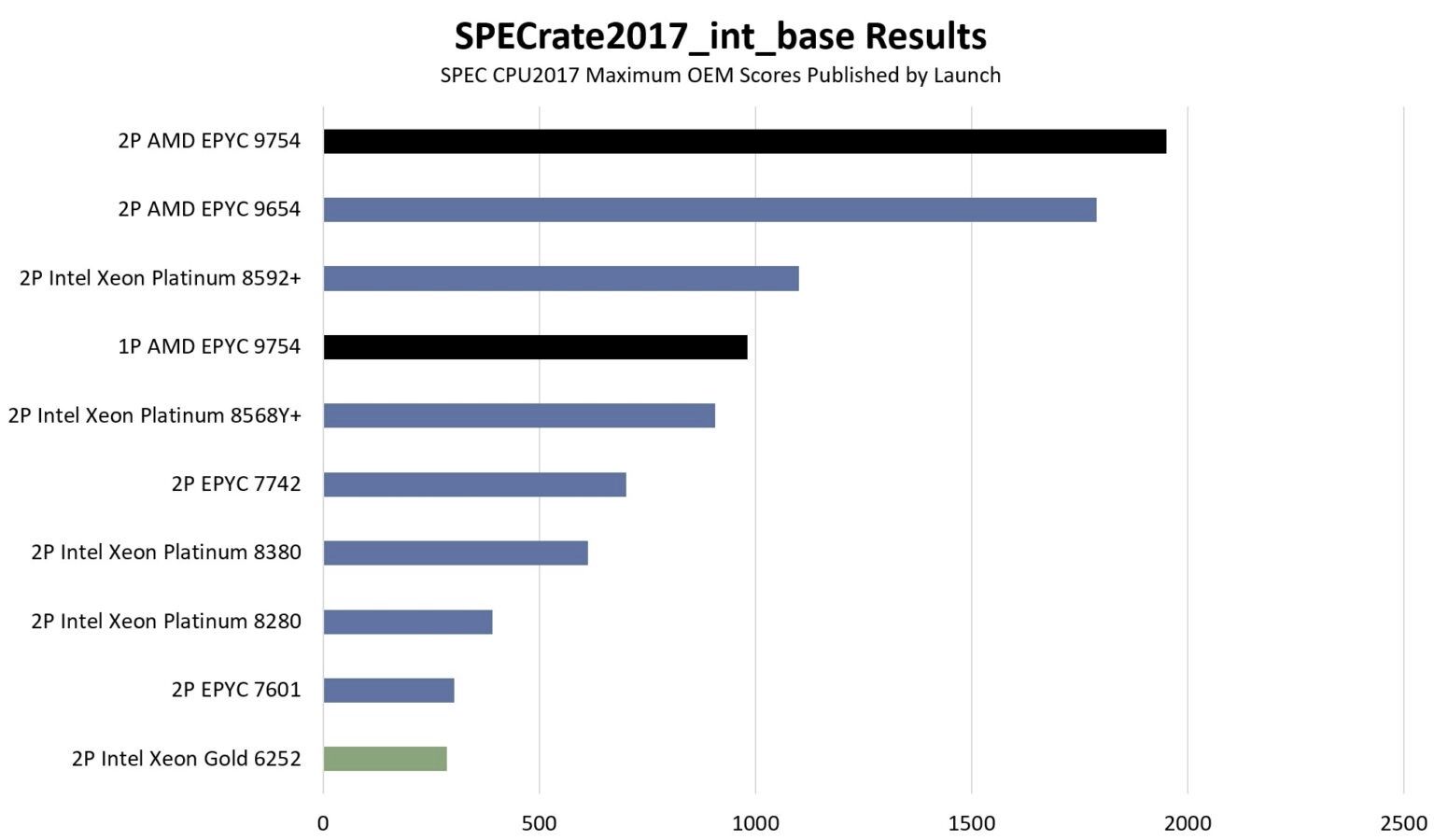

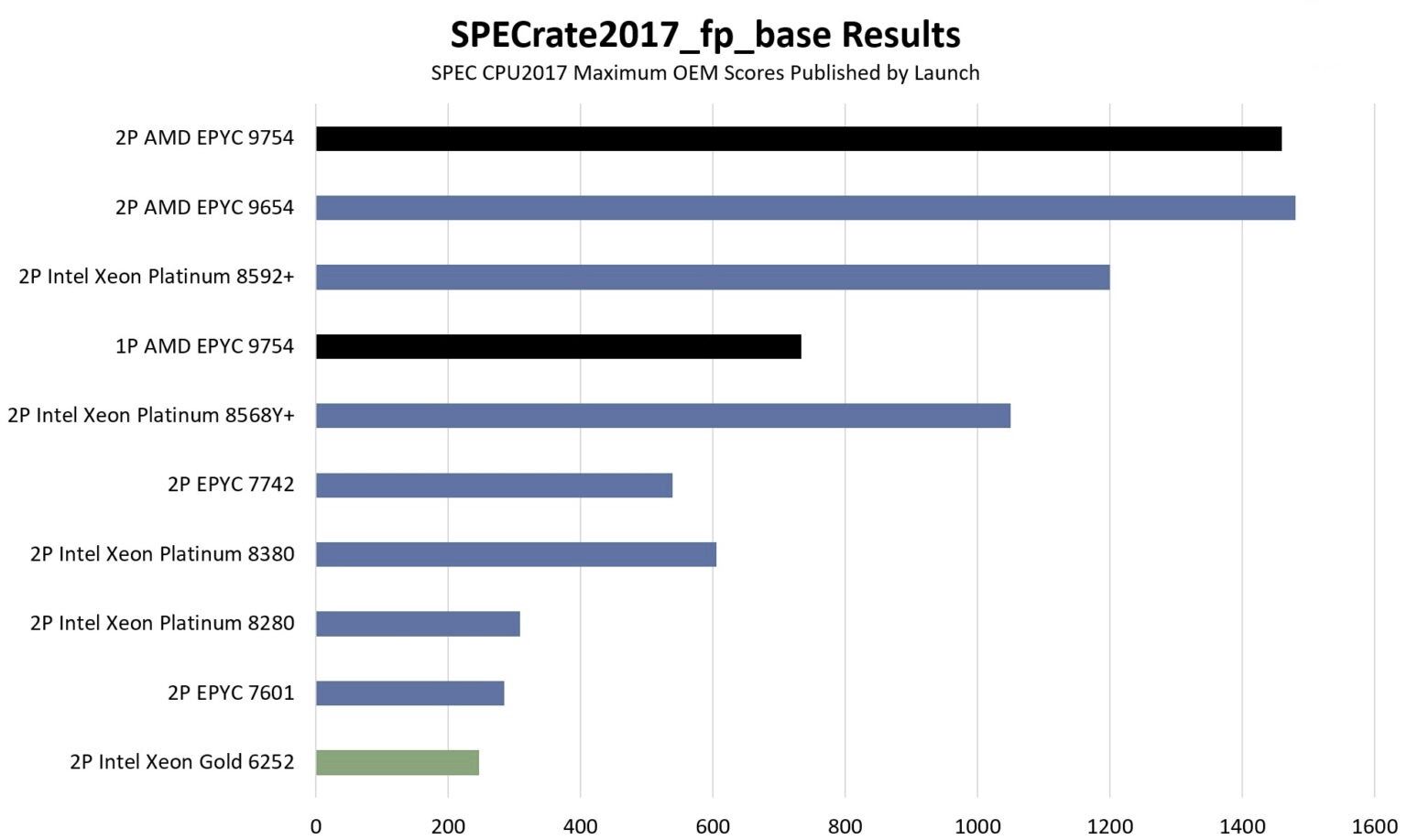

SPEC CPU2017

SPEC CPU2017 is perhaps the most common benchmark used in server RFPs. We have been running our own SPEC CPU2017 tests for quite a long time, and our results are typically several percent lower than those officially published by OEMs. The difference is usually around 5%, because OEMs deliberately optimize their systems for these important benchmarks. Now that official results for EPYC Bergamo servers are available, it is appropriate to cite them in an RFP.

To begin with, let's look at SPEC CPU2017 integer performance, the most common benchmark for corporate and cloud applications.

Next, let's consider floating-point performance.

For a complete comparison, the results of the top models of each generation are also shown. Although the two processors we chose to compare in this article are not the top-end parts, each sits near the top of its line.

A single-socket server with an AMD EPYC 9754 processor outperforms a dual-socket Intel Xeon Gold 6252 system by almost 2x. Taking these results as the expected figures, a consolidation ratio of about 5:1 can be achieved, which is very impressive.

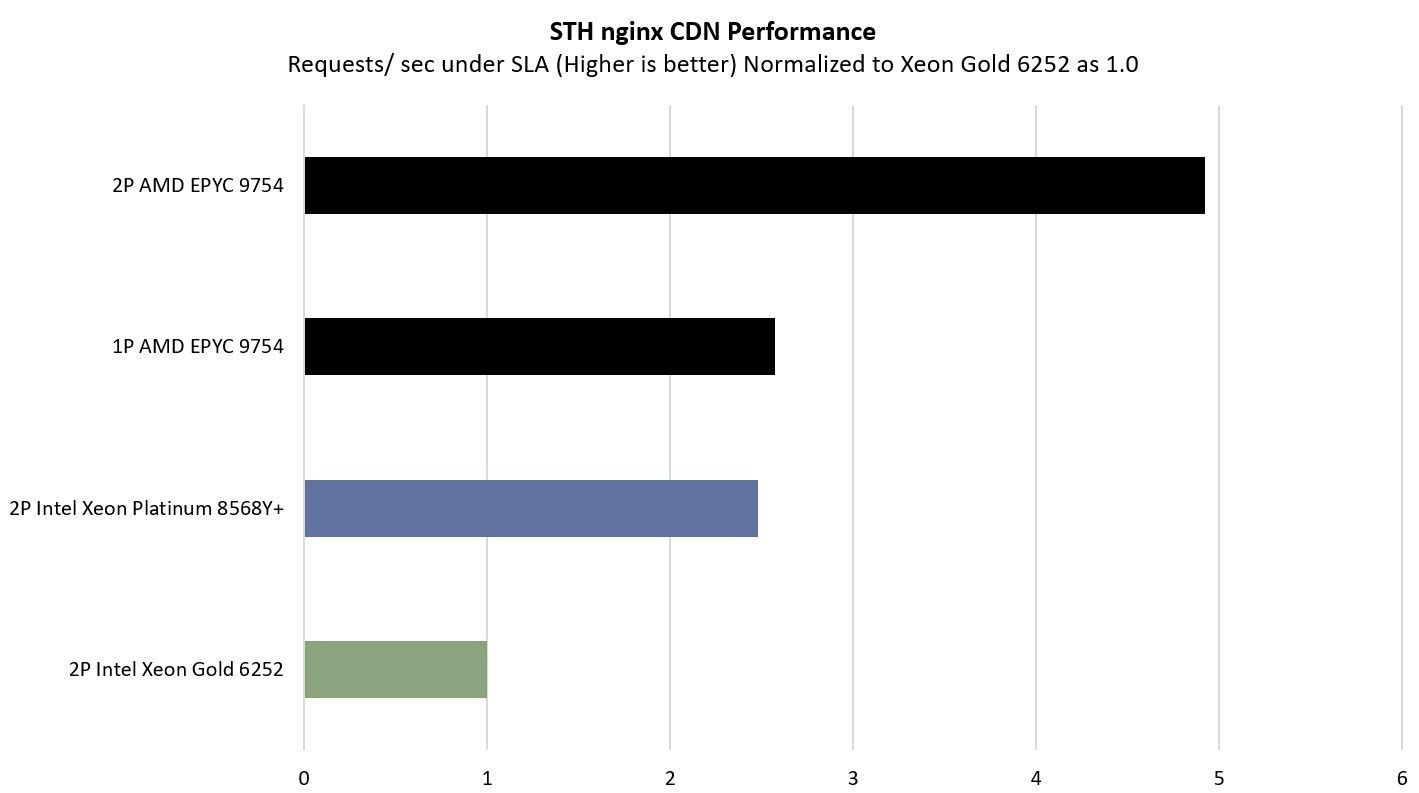

STH Nginx CDN performance

In the Nginx CDN test, which illustrates performance when serving data from disk, we use an old snapshot and access pattern from the STH website with DRAM caching disabled. This requires low latency both from Nginx itself and from the additional step of accessing the I/O interface. The serving throughput is shown below:

Even single-socket platforms perform very well here. The same goes for web serving, which is one of the main target workloads for AMD and for Arm-based server developers.

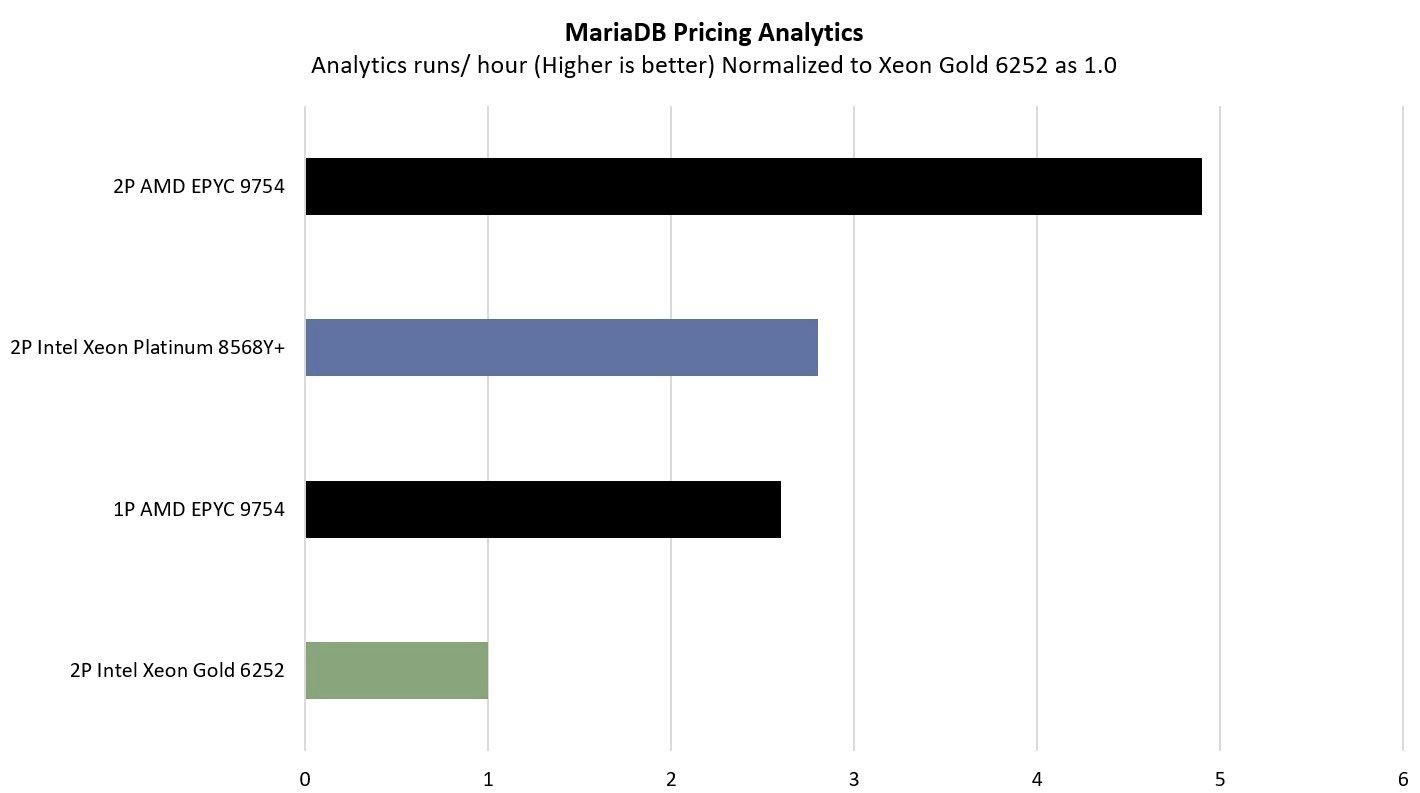

Pricing analysis in MariaDB

This workload-based test is a pricing analytics application that uses anonymized data from one of the largest and most in-demand manufacturers of turnkey data center solutions. The application analyzes multi-parameter price trends in real time, taking into account regional, product line, and supply chain data, to determine whether a specific list of device components is profitable. It is a realistic example of a workload that real organizations might run in the cloud.

Here, per-socket performance has increased more than fivefold, thanks to the higher core count, larger cache, and improved cores.

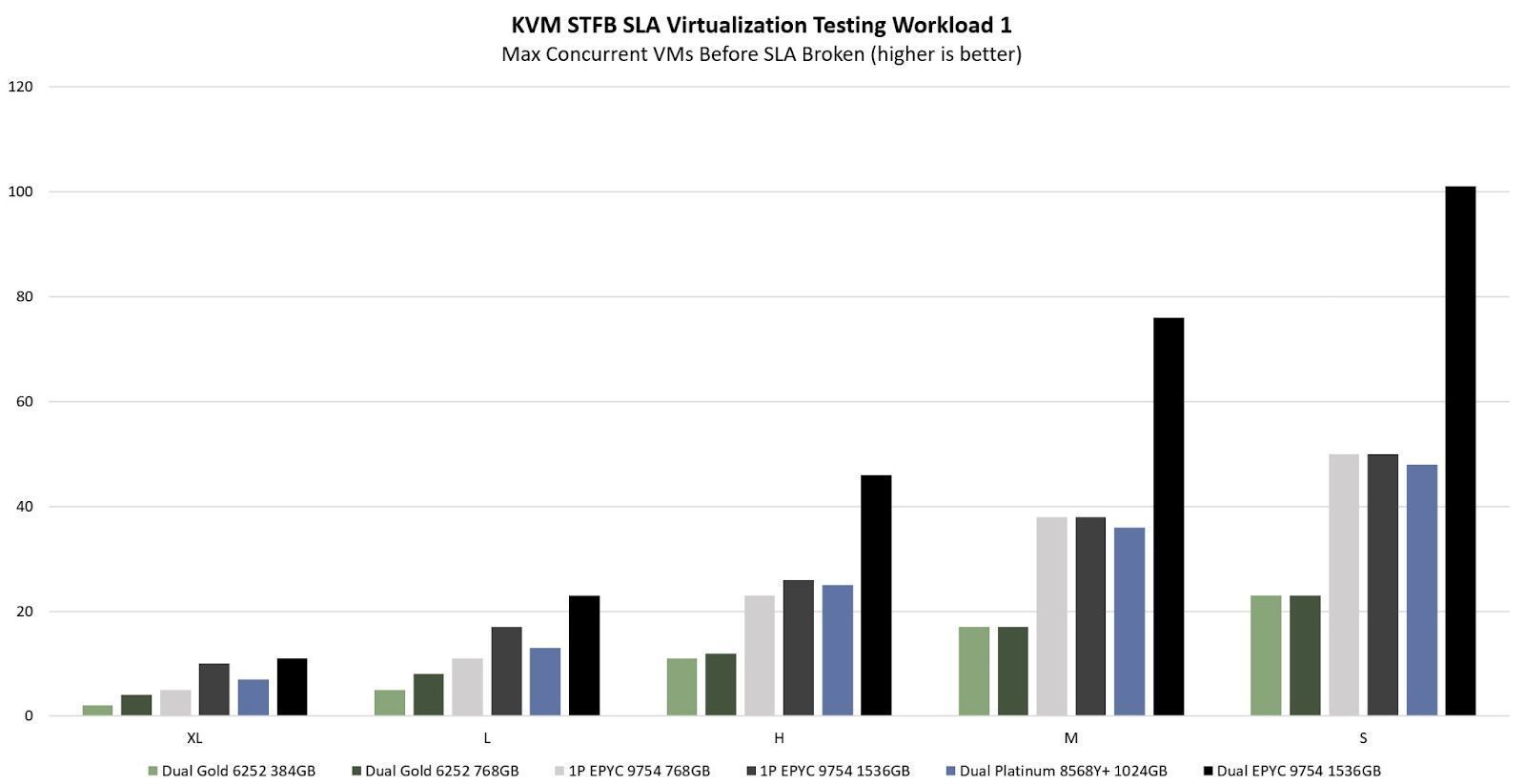

Virtualization in STH STFB KVM environment

Next, we consider another application (closed source) that uses KVM (Kernel-based Virtual Machine) virtualization to determine how many VMs can run in parallel while completing their work within the time set by the SLA (Service Level Agreement). Each VM operates independently. The task is very similar to VMware VMmark, except that the KVM scenario is more common.

In virtualization, performance is limited by both memory capacity and the processor. On small VMs, a single-socket AMD EPYC 9754 system with 24 × 32 GB DIMMs performs almost the same as one with 24 × 64 GB DIMMs; on large VMs, the difference is noticeable. Memory capacity is essential for virtualization. Note that the cloud environments Bergamo EPYC chips are designed for include a wide range of small VMs: virtual machines with 2-4 vCPUs and fairly low memory requirements of 4-8 GB per vCPU. Some applications may require more cores, so 128 cores per socket will definitely come in handy.

The conclusion: memory capacity must always be taken into account in such an upgrade. With small VMs, a 128-core single-socket platform can outperform two servers based on Intel Xeon Gold 6252, giving a consolidation ratio of 4:1 or higher.
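The interplay between the CPU limit and the memory limit can be sketched as follows. The DIMM configurations and VM sizes come from the paragraph above. The `cpu_bound_vms` values (how many VMs the processor can sustain within the SLA) are hypothetical placeholders, not measured results, and hypervisor memory overhead is ignored.

```python
from typing import Tuple


def vm_capacity(cpu_bound_vms: int, memory_gb: int, gb_per_vm: int) -> Tuple[int, str]:
    """Return how many VMs fit and which resource runs out first."""
    memory_bound_vms = memory_gb // gb_per_vm
    if memory_bound_vms < cpu_bound_vms:
        return memory_bound_vms, "memory"
    return cpu_bound_vms, "cpu"


CONFIGS_GB = {"24 x 32 GB": 24 * 32, "24 x 64 GB": 24 * 64}

# (label, GB per VM, hypothetical CPU-side limit under the SLA)
VM_SIZES = [
    ("small VM: 2 vCPU x 4 GB", 2 * 4, 90),
    ("large VM: 4 vCPU x 8 GB", 4 * 8, 40),
]

for label, gb_per_vm, cpu_limit in VM_SIZES:
    for config, memory_gb in CONFIGS_GB.items():
        count, limiter = vm_capacity(cpu_limit, memory_gb, gb_per_vm)
        print(f"{label} on {config}: {count} VMs ({limiter}-bound)")
```

With these placeholder limits, the small VMs are CPU-bound in both memory configurations, while the large VMs only reach the CPU limit with the 64 GB DIMMs.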

Power consumption: Intel Xeon (2nd generation) vs. AMD EPYC Bergamo servers

Now we are going to compare the power consumption of two Supermicro servers.

The 2nd generation Intel Xeon system consumes 80-100 W when idle, while a single-socket AMD EPYC 9754 server consumes 90-120 W when idle, so the idle figures are almost the same. Under heavy load, however, many older generation servers often reached 600-700 W, while the single-socket AMD EPYC 9754 server never drew more than 500 W.

This is where the critical difference comes in: the single-socket AMD EPYC 9754 system delivers 3-5 times more per-socket performance. Moreover, the new cloud system tends to draw less power overall than the most common dual-socket platforms of 2019-2021. The performance figures noted above are achieved at 29-50% of the power consumption of previous-generation platforms.

A 5:1 consolidation means that the virtual infrastructure of five dual-socket Xeon Gold 6252 servers can be migrated to one dual-socket AMD EPYC 9754 server. This frees up 80% of the rack space and saves up to 2.5 kW of power.
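The rack space and power figures can be reproduced with a rough model. The 600-700 W load range for the old servers comes from the measurements above. The 1000 W figure for a dual-socket EPYC 9754 server is an assumption (twice the 500 W single-socket ceiling), and all servers are assumed to occupy the same number of rack units.

```python
from typing import Tuple


def consolidation_savings(old_servers: int, old_watts: int,
                          new_servers: int, new_watts: int) -> Tuple[float, int]:
    """Return (fraction of rack space freed, watts saved under load)."""
    space_freed = 1 - new_servers / old_servers
    watts_saved = old_servers * old_watts - new_servers * new_watts
    return space_freed, watts_saved


NEW_SERVER_WATTS = 1000  # assumed load power of one dual-socket EPYC 9754 server

for old_watts in (600, 700):
    space, watts = consolidation_savings(5, old_watts, 1, NEW_SERVER_WATTS)
    print(f"old servers at {old_watts} W: {space:.0%} of rack space freed, "
          f"{watts / 1000:.1f} kW saved")
```

Under these assumptions, the upper end of the range matches the "up to 2.5 kW" quoted above.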

AMD EPYC Bergamo series: key results for the beginning of 2024

Let's see what 128 cores per socket means in practice. In our review, the widely deployed Supermicro platform based on Cascade Lake Xeon serves as an example of a possible upgrade within a 3-5 year operational cycle. If 15 such servers were purchased three years ago, they can now be consolidated into three dual-socket EPYC servers, reducing rack power consumption by 7-8 kW (enough to power a modern AI server with eight GPUs). For companies that have sufficient electrical power and additional premises and are planning AI projects this year, an effective and convenient scheme is to replace 15 outdated servers with 3 new ones, freeing up space and a share of the power budget for a modern AI server.

In addition to specialized cloud parts, AMD offers a wide range of processors for other uses, including the general-purpose Genoa series and the Genoa-X series for high-performance computing. The main advantage of Bergamo is that it is a cloud processor that maintains high per-socket performance and provides a full feature set. With proper configuration, the same server can handle a wide range of different tasks.

However, there is the question of how cost-effective single-socket systems are. Customers commonly purchase dual-socket servers, which saves money because fewer components such as power supplies, fans, and sheet metal are needed per socket. On the other hand, single-socket servers are much easier to maintain, and that is not their only advantage. The number of cloud providers building their server infrastructure on single-socket platforms keeps growing.

Do not forget about upgrading even older equipment. The Intel Xeon Gold 6252 is still a popular and capable 2nd generation Xeon model. If you are using Xeon E5 v4 or an older platform, the performance difference will be huge.

Conclusion

AMD EPYC Bergamo is a truly outstanding processor. The picture below shows a chip that provides 128 cores and 256 threads. If you deploy virtual machines with the same number of virtual processors (a very common case), this chip will still provide the highest density, even after the 144-core Sierra Forest-SP part planned for next quarter is released, and provided AmpereOne processors are still available to order. EPYC also has the widest software support (something the Arm architecture cannot offer). The new EPYC chips support VNNI as well, which can be used for AI inference.

One rather unexpected result was a consolidation ratio of about 5:1 with our single-socket 2U server. This can be a strong argument for removing old servers from the data center, which frees up space for additional AI servers. In addition, if you are planning to move from VMware products (mainly because of changes in the company's pricing policy) to KVM- or Xen-based virtualization (which has no per-socket or per-core licensing), we recommend taking a look at the increasingly popular Bergamo chips: with a payback period of less than a year, they may change your earlier decisions.
